3 reasons to believe the singularity is near

June 3, 2016

When Ray Kurzweil published his book *The Singularity Is Near* in 2005, many scoffed at his predictions. Nearly two years before Apple launched its iPhone, Kurzweil imagined humans and computers fusing, unlocking capabilities previously seen only in science fiction movies.

His argument is simple: as technology accelerates at an exponential rate, progress could eventually become nearly instantaneous, a singularity. He predicts that as computers advance, they could merge with other technologies such as genomics, nanotechnology and robotics.

Today Kurzweil’s ideas don’t seem so outlandish. AlphaGo, a program built by Google’s DeepMind, beat Lee Sedol, one of the world’s top Go players. IBM’s Watson is being applied to fields as varied as medicine, financial planning and cooking. Self-driving cars are expected to be on the road by 2020. Just as Kurzweil predicted, technology seems to be accelerating.

Reason no. 1

We’re going beyond Moore’s law.

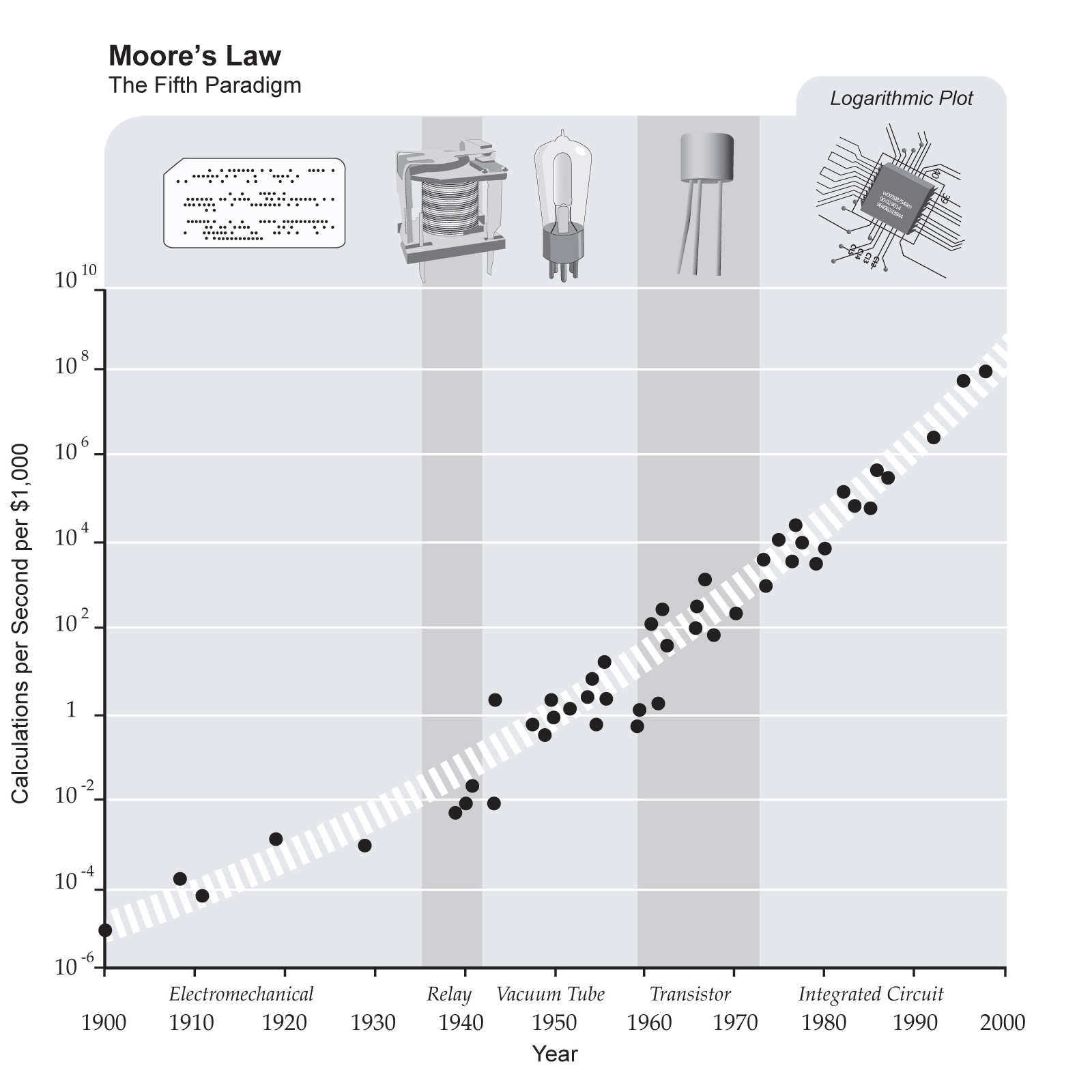

For the last 50 years, the tech industry has run on Moore’s law, the famous prediction made by Intel co-founder Gordon Moore that the number of transistors on a microchip would double roughly every two years (a pace often quoted as every 18 months). That’s what enabled computers the size of refrigerators to shrink down to devices we hold in our hands.

Now we are approaching the theoretical limit and the process is slowing down. The problem is that you can only shrink transistors so far before quantum effects, such as electrons tunneling through barriers only a few atoms thick, cause them to malfunction. While chip technology is still advancing, at some point you can’t cheat Mother Nature anymore. Many expect Moore’s law to come to a halt sometime around 2020.

Kurzweil points out that microprocessors are in fact the fifth paradigm of information processing, following electromechanical calculators, relays, vacuum tubes and discrete transistors. He argues that the number of transistors on a chip is an arbitrary way to measure performance; instead, he looks at the number of calculations per second that $1,000 can buy.

It turns out he’s right. While the process of cramming more transistors on silicon wafers is indeed slowing down, we’re finding a variety of ways to speed up overall performance, such as quantum computing, neuromorphic chips and 3D stacking. We can expect progress to continue accelerating, at least for the next few decades.

Reason no. 2

Robots are doing human jobs.

Unimate, the first industrial robot, arrived on a General Motors assembly line in 1962, welding auto bodies together. Since then, automation has quietly slipped into our lives. From automatic teller machines in the 1970s to the autonomous Roomba vacuum cleaner in 2002, machines are increasingly doing the work of humans.

Today we’re beginning to reach a tipping point. Rethink Robotics makes robots like Baxter and Sawyer, which can work safely around humans and can learn new tasks in minutes. Military robots are becoming common on the battlefield, and soldiers are developing emotional bonds with them, some even going so far as to hold funerals for fallen robots.

And lest you think that automation only applies to low-skill, mechanical jobs, robots are also invading the creative realm. A short novel co-written by a computer program recently passed the first round of screening for Japan’s prestigious Hoshi Shinichi Literary Award.

The future will be more automated still. The US Department of Defense is already experimenting with chips embedded in soldiers’ brains, and Elon Musk says he’s thinking about commercializing similar tech. As the power of technology continues to grow exponentially (computers could be more than 1,000 times more powerful in 20 years), robots will take on even more tasks.
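The "1,000 times in 20 years" figure is simply exponential doubling compounded over time. A minimal sketch, assuming computing power doubles once every two years (the doubling period is an assumption chosen to match the Moore’s law pace, not a figure stated in this passage); the function name `growth_factor` is hypothetical.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return how many times more powerful a technology becomes after `years`,
    assuming its capability doubles once every `doubling_period_years`
    (an illustrative assumption, not a measured rate)."""
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    # Number of doublings that fit in the interval, compounded.
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Two-year doubling over 20 years: 2 ** 10 = 1024, just over 1,000x.
    print(growth_factor(20, 2))  # 1024.0
    # An 18-month doubling over the same 20 years compounds to roughly 10,000x.
    print(round(growth_factor(20, 1.5)))
```

Small changes in the assumed doubling period produce large differences over decades, which is why estimates of future computing power vary so widely.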

Reason no. 3

We’re editing genes.

In 2003 scientists completed a full map of the human genome. For the first time we actually knew the sequence of our genes and could begin to track their function. Just two years later, in 2005, the US government started compiling the Cancer Genome Atlas, which allows doctors to target cancers based on their genetic makeup rather than the organ in which they originate.

Now scientists have a new tool at their disposal called CRISPR, which allows them to edit genes easily and cheaply. It is already opening up avenues to render viruses inactive, regulate cell activity, create disease-resistant crops and even engineer yeast to produce ethanol that can fuel our cars.

The technology is also creating controversy. When you start editing the code of life, where do you stop? Will we soon create designer babies with predetermined eye color, intelligence and physical traits? Should we alter the genome of mosquitoes in Africa so they can no longer carry the malaria parasite?

These types of ethical questions used to be mostly science fiction, but as we hurtle toward the singularity, they are becoming real.

The truth is that the future of technology is all too human. The idea of approaching a technological singularity is both exciting and scary. While technologies hundreds of times more powerful than what we have today will open up new possibilities, they also bring dangers. How autonomous should we allow robots to be? Which genes are safe to edit, and which are not?

Beyond opening up a Pandora’s box of forces that we may not understand, there is already evidence that technology is destroying jobs, holding incomes flat and increasing inequality. As the process accelerates, we will begin to face problems that technology cannot solve for us, such as social strife among those left behind and pressure from people in developing countries who feel newly empowered and demand a greater political voice.

We will also have to change how we view work. Much as machines replaced physical labor in the Industrial Revolution, new technologies are now replacing cognitive tasks. Humans will have to become adept at what machines can’t do, namely dealing with other humans, and social skills will increasingly trump cognitive skills in the marketplace.

While technologies will continue to become exponentially more powerful, the decisions we make are still our own.

Highlighted reading:

Articles referenced by Greg Satell, Forbes author & founder of Digital Tonto.

Digital Tonto | main

Digital Tonto | founder & author: Greg Satell

Digital Tonto | articles: accelerating returns

Digital Tonto | articles: Moore’s law

Digital Tonto | article: “Moore’s law will soon end, but progress doesn’t have to”

Digital Tonto | article: “Cloud computing just entered new territory”

Digital Tonto | article: “IBM revolutionary new model for computing, the human brain”

Digital Tonto | article: “Creative intelligence”

Digital Tonto | article: “Biggest world problems aren’t what you think, will require collective solutions”

Digital Tonto | article: “Why we seem to be talking more and working less, the nature of work has changed”

Digital Tonto | article: “Why atoms are the new bits”

Digital Tonto | article: “Why the future of tech is all too human”

Digital Tonto | article: “Why social skills are trumping cognitive skills”

Digital Tonto | article: “3 big technologies to watch next decade: genomics, nanotech, robotics”

Digital Tonto | article: “4 major paradigm shifts will transform future of tech”

Digital Tonto | article: “5 trends that will drive the future of tech”

Digital Tonto | article: “5 powerhouse industries of the 21st century”